
Video Encoding, SoC Development, and TI DSP Architecture

H.264 provides visual quality similar to the MPEG2 video standard at half the bit rate. However, the H.264 video algorithm is far more complex than MPEG2, both in computational complexity and in data bandwidth requirements. In fact, H.264 encoding and decoding are so complex that it is not uncommon to benchmark a given SoC by its ability to support high-definition encoding/decoding of video content in the H.264 format.

This article provides a brief overview of the basic video encoder, and of the challenges and considerations involved in providing the optimal video encoding solution for a given application segment, including choosing the right SoC architecture, software challenges, visual quality tradeoffs, scalability, ease of integration and time to market.

Tuning the encoder for each application

High-definition video encoding applications (720p, 1080i) are growing rapidly in the consumer market. The application spectrum ranges from low-end to high-end applications, including digital still cameras, surveillance, videophones and digital TV broadcasting. The overall system requirements, acceptable limitations and tradeoffs vary widely across these applications.

The main parameters to consider are visual quality, cost of the solution, ability to support multiple video formats, power consumption and communication delay.

Let's consider a few examples. For the broadcast market, video quality is the main consideration, while the digital camera market is very sensitive to the cost of each unit sold. The ability to support multiple video formats is a primary requirement for the IP set-top box market, while delay is one of the important considerations for video conferencing. The list goes on. The video encoder solution has to be tuned to the application constraints for optimal performance.

Complexity challenges

Video algorithms require a tremendous amount of computation power and data bandwidth. This complexity depends on the mode (encoding vs. decoding), video standard, resolution, frame rate and visual quality constraints. Typically, encoding is more complex than decoding by a factor of about 3-5. The complexity scales directly with the resolution and frame-rate requirements. High-definition encoding (e.g. 720p or 1080i at 30 fps) in the H.264 format is the most difficult scenario for all aspects of SoC and product development.

A high visual quality requirement for encoding calls for a sophisticated set of algorithms, which comes at the expense of computational complexity and data bandwidth. Typically, H.264 HD encoding needs data bandwidth in the range of 2-3 Gbits/second for 720p at 30 fps. The computation power requirement also easily reaches the GFLOPS range, depending on the actual encoding algorithm. This puts tremendous pressure on SoC architecture and design, as well as on software optimization, to enable an HD encoding solution.
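To put the bandwidth figure in perspective, the following minimal sketch computes the cost of moving one uncompressed 720p stream once. It assumes 8-bit samples and 4:2:0 chroma sampling, which the article does not state; the function name is illustrative only.

```python
def raw_video_bits_per_second(width, height, fps, bits_per_sample=8):
    """Bits per second needed to move uncompressed video once.

    Assumes 4:2:0 sampling: one full-size luma plane plus two
    quarter-size chroma planes, i.e. 1.5 samples per pixel.
    """
    samples_per_frame = width * height * 3 // 2
    return samples_per_frame * bits_per_sample * fps


raw_720p30 = raw_video_bits_per_second(1280, 720, 30)
print(f"raw 720p30 stream: {raw_720p30 / 1e9:.3f} Gbit/s")

# How many times the raw stream fits into the quoted 2-3 Gbit/s range.
for quoted in (2e9, 3e9):
    print(f"{quoted / 1e9:.0f} Gbit/s is about {quoted / raw_720p30:.1f}x the raw stream")
```

Under these assumptions the raw stream is roughly a third of a Gbit/s, so the quoted 2-3 Gbits/second corresponds to touching the frame data several times per frame. One plausible reading is that reference-frame fetches for motion estimation and writes of reconstructed frames account for the extra traffic.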

Choosing architectures

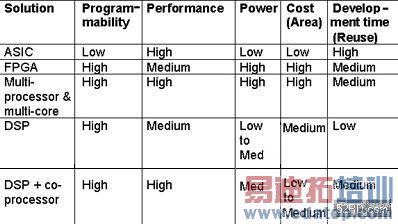

Video standards (H.264, MPEG) keep improving in compression efficiency, and there is a host of proprietary formats that a given piece of silicon may have to support depending on the target application, e.g. RealVideo, Windows Media and On2 video. These dynamics call for programmable solutions that can keep pace with the arrival of new standards and features. At the same time, performance requirements in terms of frame rate, resolution and visual quality keep rising. Traditionally, ASIC solutions have been used to meet higher performance requirements, but they lack the programmability needed to address the dynamics of the video market. For the consumer market, cost is one of the major criteria that determine the success of a given piece of silicon. In the mobile space, power consumption is one of the most important criteria because it determines battery life. The following table summarizes the various SoC architecture tradeoffs.

Table 1: SoC table

Texas Instruments DSP and Co-Processor

The TMS320C64x+ family is a fully programmable DSP family designed to cater to video applications. The C64x/C64x+ DSP core enables high-end video applications such as set-top boxes, personal video recorders and high-end cell phones. Higher performance can be achieved by increasing the clock frequency or by using multiple DSPs. The disadvantages of these approaches are increased silicon area (and hence cost) and higher power consumption.

The alternative is to add a special-purpose co-processor that improves imaging and video performance at lower cost and power. This approach combines the advantages of both worlds, i.e. a fully programmable DSP and an ASIC solution. The co-processors boost video and imaging performance by providing more processing power and special-purpose dedicated hardware (e.g. hardware support for VLC/VLD). These co-processors can operate at a different clock speed and require less silicon area, so video and imaging applications that use them consume less power. DaVinci™ from Texas Instruments falls into this category.

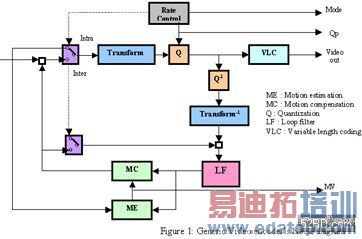

Introduction to the video encoder

Fig. 1 shows a block diagram of a generic video encoder. Motion estimation finds the motion of macroblocks, expressed as motion vectors, in order to reduce temporal redundancy among input frames. A transform (most commonly the Discrete Cosine Transform, DCT) is then applied to the motion-compensated prediction difference frames to de-correlate the prediction error. The resulting transform coefficients are quantized according to the target bit-rate requirements. The quantized DCT coefficients, motion vectors and side information are entropy coded using variable length codes (VLCs). The reconstruction path in the encoder consists of inverse quantization, inverse transform, loop filter and motion compensation, mimicking the operation of the decoder.


Figure 1: Generic video encoder block diagram
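The forward and reconstruction paths of the block diagram can be sketched for a single block as follows. This is an illustration under simplifying assumptions, not the method of any particular standard: it uses a floating-point 4x4 DCT and a single uniform quantizer step, takes the motion-compensated prediction as given, and omits entropy coding and the loop filter. All function names are hypothetical.

```python
import math

N = 4  # block size used for this sketch


def dct_matrix(n):
    """Orthonormal DCT-II basis matrix C, so that coeffs = C * block * C^T."""
    rows = []
    for k in range(n):
        scale = math.sqrt(1.0 / n) if k == 0 else math.sqrt(2.0 / n)
        rows.append([scale * math.cos(math.pi * (2 * i + 1) * k / (2 * n))
                     for i in range(n)])
    return rows


def matmul(a, b):
    return [[sum(a[i][k] * b[k][j] for k in range(len(b)))
             for j in range(len(b[0]))] for i in range(len(a))]


def transpose(m):
    return [list(row) for row in zip(*m)]


C = dct_matrix(N)
CT = transpose(C)


def encode_block(cur, pred, qstep):
    """Forward path: prediction residual -> DCT -> quantization."""
    resid = [[cur[y][x] - pred[y][x] for x in range(N)] for y in range(N)]
    coeffs = matmul(matmul(C, resid), CT)
    return [[round(v / qstep) for v in row] for row in coeffs]


def reconstruct_block(levels, pred, qstep):
    """Reconstruction path: inverse quantization -> inverse DCT -> add prediction."""
    coeffs = [[lv * qstep for lv in row] for row in levels]
    resid = matmul(matmul(CT, coeffs), C)
    return [[min(255, max(0, round(pred[y][x] + resid[y][x])))
             for x in range(N)] for y in range(N)]


# Made-up sample data: a current block and its motion-compensated prediction.
cur = [[52, 55, 61, 66], [63, 59, 55, 90], [62, 59, 68, 113], [63, 58, 71, 122]]
pred = [[50, 54, 60, 64], [60, 58, 57, 85], [60, 60, 70, 110], [61, 60, 70, 120]]
QSTEP = 2

levels = encode_block(cur, pred, QSTEP)
recon = reconstruct_block(levels, pred, QSTEP)
max_err = max(abs(cur[y][x] - recon[y][x]) for y in range(N) for x in range(N))
print("quantized levels:", levels)
print("max reconstruction error:", max_err)
```

The encoder reconstructs from the quantized levels rather than from the original block, so that its reference frames match what the decoder will see. A larger `QSTEP` produces smaller levels (fewer bits) at the cost of a larger reconstruction error.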

The existing video standards all follow the same block diagram, but differ in the flexibility and details of each block. The following table summarizes the differences between MPEG2 Main Profile (MP), MPEG4 Advanced Simple Profile (ASP) and H.264 High Profile (HP).

Table 2: MPEG2, MPEG4, H.264 table

Typical Video SoC architecture

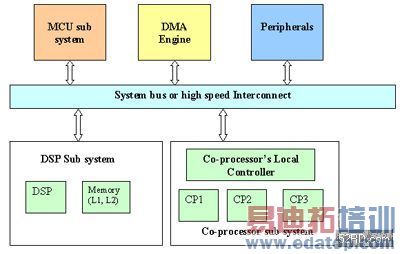

Figure 2 shows a block diagram of a typical video SoC using a DSP and co-processors. The SoC consists of several sub-systems, each catering to a different function.

- The DSP sub-system consists of the DSP with its L1 and L2 memory. It handles the video and audio algorithms.

- The co-processor sub-system consists of a controller and various co-processors. It accelerates video and imaging algorithms (e.g. MPEG4, H.264) by providing dedicated processing power. Fully programmable co-processors (mainly Single Instruction Multiple Data, SIMD) and dedicated co-processors are used, depending on application requirements.

- Video algorithms typically need a large amount of data movement across the SoC. The DMA engine takes care of data movement inside and outside the SoC. DMA engines usually support various transfer options that help video and imaging applications (e.g. 2D-to-2D transfers).

- The SoC contains an image pipe to interface with the camera unit (e.g. CCD, CMOS), peripherals to address various storage devices (e.g. HDD, DVD), and dedicated interfaces (e.g. USB, Ethernet, HDMI), depending on the application.

Figure 2: Generic Video SoC
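The 2D-to-2D transfers mentioned above can be modeled in software as a strided copy. The sketch below is a software model only: the parameter names (`src_pitch`, `dst_pitch`, `line_bytes`, `num_lines`) are illustrative assumptions and do not correspond to any particular DMA controller's register set.

```python
def dma_2d_copy(src, src_offset, src_pitch,
                dst, dst_offset, dst_pitch,
                line_bytes, num_lines):
    """Copy num_lines rows of line_bytes each.

    Source and destination advance by their own pitch (bytes between the
    starts of consecutive rows), which is what lets a block be pulled out
    of a wide frame into a compact local buffer.
    """
    for line in range(num_lines):
        s = src_offset + line * src_pitch
        d = dst_offset + line * dst_pitch
        dst[d:d + line_bytes] = src[s:s + line_bytes]


# An 8x6 "frame" whose byte values equal their linear index.
FRAME_W, FRAME_H = 8, 6
frame = bytearray(range(FRAME_W * FRAME_H))

# Fetch the 4x4 block whose top-left corner is at x=2, y=1
# into a tightly packed 4x4 buffer.
block = bytearray(16)
dma_2d_copy(frame, 1 * FRAME_W + 2, FRAME_W, block, 0, 4, 4, 4)
print(list(block))
```

In a real system this copy would be performed by the DMA hardware in the background, so the DSP or co-processor can work on one block while the next one is being fetched.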

Software development flow

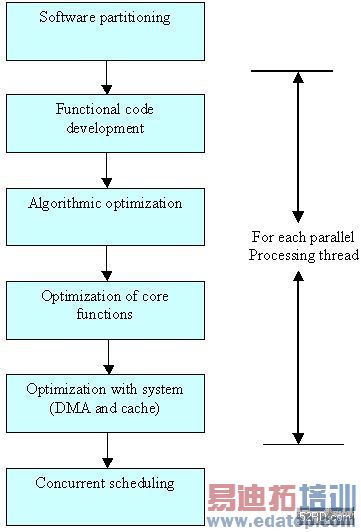

Figure 3 shows a typical software optimization flow on a given architecture. The first stage, software partitioning, maps the video algorithm onto the DSP and co-processors. Optimal performance depends on choosing the right partitioning among the available options.

Figure 3: Software optimization flow

Basic development starts with writing sequential code that uses the underlying architecture (i.e. DSP and co-processors). The basic functional flow is verified with a set of conformance streams and additional video streams. The next stage is profiling the code and identifying cycle-intensive functions. Based on the profiling results, algorithmic and processor-specific optimizations are carried out.

Algorithmic optimization involves finding fast algorithms for motion estimation, mode decision, rate control, transform and so on. In many cases, such fast algorithms trade off visual quality to reduce complexity. The next stage is optimizing the selected algorithm for the underlying architecture (i.e. DSP and co-processors). Various optimization techniques (e.g. loop unrolling, software pipelining) exist to reduce the cycle requirements of a given algorithm. (Readers can refer to standard DSP textbooks and to application notes provided by semiconductor vendors for more insight.)

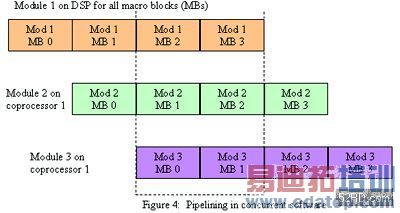

Next, the software needs to be optimized for the given SoC: DMA is used to reduce data bandwidth overhead, and code placement is tuned to minimize cache penalties. This process is repeated for each parallel thread. For dedicated co-processors, some of the steps described above do not apply. The final optimization stage is the concurrent scheduling of all parallel threads, which is done with a pipelining technique implemented in software, as shown in Figure 4. One important consideration is reducing the communication overhead among the concurrent threads. The local co-processor controller can be used for this purpose: it reduces the number of low-level interactions (e.g. at macroblock level) that would otherwise occur at high frequency, which improves overall system performance.

Figure 4: Pipelining in concurrent software
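The idea behind software pipelining across concurrent threads can be sketched as a schedule table. The sketch assumes every stage takes one equal time slot per macroblock, and the three-stage split is a hypothetical example rather than the partitioning used on any specific device.

```python
def pipeline_schedule(num_macroblocks, stages):
    """Return one dict per time slot mapping stage name -> macroblock index.

    In slot t, stage number s works on macroblock t - s, so consecutive
    macroblocks occupy consecutive stages at the same time.
    A value of None means the stage is idle in that slot.
    """
    if num_macroblocks <= 0:
        return []
    total_slots = num_macroblocks + len(stages) - 1
    schedule = []
    for t in range(total_slots):
        slot = {}
        for s, name in enumerate(stages):
            mb = t - s
            slot[name] = mb if 0 <= mb < num_macroblocks else None
        schedule.append(slot)
    return schedule


# Hypothetical assignment of encoder stages to parallel processing units.
STAGES = ["motion_estimation", "transform_quant", "entropy_coding"]

schedule = pipeline_schedule(4, STAGES)
for t, slot in enumerate(schedule):
    print(t, slot)

sequential_slots = 4 * len(STAGES)
print("pipelined slots:", len(schedule), "sequential slots:", sequential_slots)
```

Once the pipeline is full, one macroblock completes per slot. In practice the slowest stage sets the slot length, which is why balancing the partitioning across DSP and co-processors matters.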

Video Quality Considerations

For video encoding, the algorithm designer has many alternatives. All of the available options produce content that is compliant with a given standard, but they differ in visual quality. The algorithm designer needs to choose the combination that produces the best possible visual quality subject to the constraint on computational complexity.

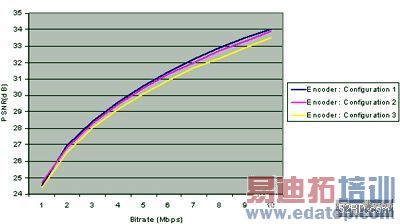

Various metrics are available to measure visual quality. Subjective video quality measurement (as per ITU recommendations) is the most reliable method, but it is time consuming. Objective measures are the alternative. The most widely used is PSNR (peak signal-to-noise ratio), which is easy to compute and therefore shortens development time.
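PSNR is defined as 10·log10(MAX² / MSE), where MSE is the mean squared error between the original and the reconstructed frame and MAX is the peak sample value (255 for 8-bit video). A minimal sketch, assuming frames are given as lists of rows of 8-bit luma samples:

```python
import math


def psnr(reference, test, peak=255):
    """PSNR in dB between two equally sized frames (lists of rows)."""
    squared_error = 0
    count = 0
    for ref_row, test_row in zip(reference, test):
        for r, t in zip(ref_row, test_row):
            squared_error += (r - t) ** 2
            count += 1
    if squared_error == 0:
        return math.inf  # identical frames: PSNR is unbounded
    mse = squared_error / count
    return 10.0 * math.log10(peak * peak / mse)


# Made-up frames: every sample of the decoded frame is off by exactly one.
original = [[100] * 8 for _ in range(8)]
decoded = [[101] * 8 for _ in range(8)]
print(f"{psnr(original, decoded):.2f} dB")
```

Higher values mean the reconstructed frame is numerically closer to the original. PSNR does not always track perceived quality, which is why subjective tests remain the reference.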

The most computationally complex and data-hungry block in the video encoder is motion estimation (ME). Algorithm designers usually reduce the complexity of this block with a fast algorithm that uses an intelligent search mechanism, at the expense of some visual quality. Similarly, there are fast algorithms for mode decision (intra vs. inter, and selection among the inter modes and intra modes) that affect visual quality. To reduce the complexity of the video algorithm, designers use an intelligent mode decision algorithm instead of the brute-force method of trying all combinations and selecting the best result.

Finally, the algorithm designer picks a rate control scheme that suits the application, e.g. one tuned for low-delay video communication, for local storage or for offline processing.

These tradeoffs depend on factors such as resolution, bit rate, frame rate and application scenario. For high definition, the tradeoffs differ from those at low resolution (e.g. CIF). For example, the quality gain from allowing up to 16 motion vectors per macroblock tends to be smaller at high-definition resolution. In the algorithm optimization phase, the algorithm designer evaluates these tradeoffs and selects the best combination for the application and architecture.
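The cost difference between brute-force and fast motion estimation can be illustrated by counting block comparisons. The sketch below compares an exhaustive full search with the classic three-step search, used here as one example of a fast algorithm and not as the method of any particular vendor. The frame content, block size and search range are made up for illustration.

```python
def sad(cur, ref, bx, by, dx, dy, n):
    """Sum of absolute differences between the block of `cur` at (bx, by)
    and the block of `ref` displaced by (dx, dy)."""
    return sum(abs(cur[by + y][bx + x] - ref[by + dy + y][bx + dx + x])
               for y in range(n) for x in range(n))


def in_bounds(ref, bx, by, dx, dy, n):
    return (0 <= bx + dx and bx + dx + n <= len(ref[0])
            and 0 <= by + dy and by + dy + n <= len(ref))


def full_search(cur, ref, bx, by, n, rng):
    """Test every displacement within +/- rng. Returns (mv, cost, evaluations)."""
    best_cost, best_mv, evals = None, (0, 0), 0
    for dy in range(-rng, rng + 1):
        for dx in range(-rng, rng + 1):
            if not in_bounds(ref, bx, by, dx, dy, n):
                continue
            cost = sad(cur, ref, bx, by, dx, dy, n)
            evals += 1
            if best_cost is None or cost < best_cost:
                best_cost, best_mv = cost, (dx, dy)
    return best_mv, best_cost, evals


def three_step_search(cur, ref, bx, by, n, first_step=4):
    """Test 8 neighbours around the current best, then halve the step."""
    cx, cy = 0, 0
    best_cost = sad(cur, ref, bx, by, 0, 0, n)
    evals = 1
    step = first_step
    while step >= 1:
        best_here = (cx, cy)
        for oy in (-step, 0, step):
            for ox in (-step, 0, step):
                dx, dy = cx + ox, cy + oy
                if (ox == 0 and oy == 0) or not in_bounds(ref, bx, by, dx, dy, n):
                    continue
                cost = sad(cur, ref, bx, by, dx, dy, n)
                evals += 1
                if cost < best_cost:
                    best_cost, best_here = cost, (dx, dy)
        cx, cy = best_here
        step //= 2
    return (cx, cy), best_cost, evals


# Synthetic 48x48 reference frame; the current frame is the same content
# shifted so that the true motion vector is (dx, dy) = (3, -2).
SIZE = 48
ref = [[(3 * x * x + 5 * y * y + 7 * x * y) % 256 for x in range(SIZE)]
       for y in range(SIZE)]
cur = [[ref[min(max(y - 2, 0), SIZE - 1)][min(max(x + 3, 0), SIZE - 1)]
        for x in range(SIZE)] for y in range(SIZE)]

full_mv, full_cost, full_evals = full_search(cur, ref, 16, 16, 16, 7)
tss_mv, tss_cost, tss_evals = three_step_search(cur, ref, 16, 16, 16)
print("full search:", full_mv, full_cost, full_evals)
print("three-step :", tss_mv, tss_cost, tss_evals)
```

The three-step search covers the same ±7 range with far fewer comparisons, but it can settle on a worse match than the full search when the error surface is not smooth. That is the quality-versus-complexity tradeoff described above.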

Typically, each vendor provides PSNR plots (PSNR vs. bit rate for a given resolution and frame rate), as shown below, for standard sets of video test sequences (e.g. Foreman, Mobile, Akiyo). The right selection of mode decision schemes, fast ME algorithms and rate control mechanisms is the secret sauce behind good visual quality. These tradeoffs in video encoding are the main differentiating factor among solutions, and every vendor guards them closely as intellectual property (IP).

Table 3: Typical PSNR plots

Integration and scalability

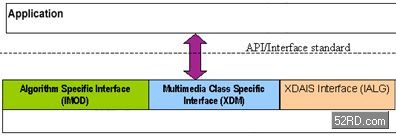

One of the important factors in achieving faster time to market is a short integration time for the video algorithms on the customer's system. For smooth integration, it is imperative that a video encoder follow standards for its API/interface. Texas Instruments has defined the eXpressDSP Algorithm Interoperability Standard (xDAIS) to handle integration issues. This standard enables the co-existence of multiple algorithms, and of multiple instances of an algorithm, in a given system. It also provides a standard way to access hardware resources (e.g. DMA). More recently, Texas Instruments defined a set of enhancements named eXpressDSP Digital Media (xDM), built on top of xDAIS, to enable a plug-and-play architecture for multimedia algorithms, as shown in Figure 5. This also enables a scalable model in which it is easier to add newer video algorithms to a given system, and in which migration to next-generation devices requires only minor changes to application-level software.

Figure 5: XDM Interface

Conclusion

Video encoding will remain an active area in academia and industry due to the arrival of newer standards, the move to high-definition content, the emergence of new applications (e.g. transcoding) and the consolidation of existing ones. The importance of software optimization and modeling tools will grow as SoC complexity increases. The ability to find the right mix of all these factors will be crucial to the success of a given application.